Prompt engineering for marketers: The foundational guide

The term "prompt engineering" gets thrown around a lot, often reduced to simple conversational tricks. But in a professional automation context, it's a serious skill. It is the process of building instructions for a machine by structuring commands, guiding critical context, controlling tool usage, and setting clear constraints on the output. This is how you'll get predictable and scalable results from AI agents.

This is where people often get stuck. The excitement for AI quickly turns to disappointment when the output is bland, incorrect, or misses the point entirely. Often, the problem isn't the LLM, it's the instruction you give it. The quality of your AI's output is a direct reflection of the quality of your input.

This is the skill gap that prompt engineering solves. It’s the difference between asking a vague question and giving a precise, expert-level command.

First, what is an LLM actually doing?

To effectively leverage a large language model (LLM), it’s crucial to understand its core operational mechanics.

- First, an LLM is fundamentally a sophisticated prediction engine. At its core, its primary function is to predict the next most likely word (or "token") in a sequence. It generates responses not through human-like reasoning but by calculating the most probable continuation based on the vast patterns in its training data. When you provide a prompt, you are initiating a statistical process, not a cognitive one.

- Second, an LLM has no inherent knowledge of your specific business context. It doesn't "understand" your unique marketing channels, "know" your quarterly business objectives, or have any form of consciousness. It simply processes the input you provide and generates a statistically probable output. This limitation is precisely what makes your prompt so critical: it must supply the essential context, instructions, and constraints that the model inherently lacks.

- Third, an AI agent possesses a basic form of reasoning. Within a tool like n8n, an agent can follow a chain of thought to execute multi-step tasks. However, this reasoning is not human intuition. For it to work, you must provide clear, step-by-step instructions. The agent can't guess what you mean. This is where precise prompt engineering becomes essential, as it provides the logical framework the agent needs to follow to get from problem to solution.

While this guide focuses on applying these principles within n8n, they are universal. They also apply whether you are using ChatGPT or Claude, or a different automation tool like Zapier.

The core components of a powerful prompt

A well-crafted prompt is built from clear, distinct components that work together to guide the AI. By breaking your request down into these parts, you can move from simple questions to detailed instructions that deliver reliable results.

1. Role: Assigning a persona to the AI

Your instruction should be highly specific. Give the model a detailed professional role, complete with the company context and team. For example:

"You are a senior paid search bid and budget management analyst in the performance marketing team of Semrush Enterprise."

2. Context: Providing the necessary background

This is where you provide the business goal, the marketing environment, or specific BigQuery data the AI needs to complete its task. Without context, the AI has to guess, and it will almost always guess wrong.

Example: "Our marketing targets a specific audience: B2B SaaS marketing managers. We’re not focused on short-term revenue; our north star is maximizing 12 Month LifeTime value (12MLTV). We have monthly budgets per channel, but we're flexible to reallocate spend toward channels, GEOs and campaigns with the highest incremental impact."

3. Task: The specific, clear instruction

Your task instruction should be direct and unambiguous. Use strong verbs that describe exactly what you need the AI to do. Instead of "look at this data," use "You're building a weekly performance report, where you'll report in a brief and actionable story. Prioritize your storyline based on the channel, subchannel, geo, campaign type and metric hierarchy highlighted in the ##5. Channels & KPIs section."

4. Format: Defining the structure of the output

Defining the output format is essential for automation, especially in n8n. It ensures the data is returned in a predictable structure that downstream nodes in your workflow can use without errors.

Example: "Provide the output as a JSON object with the keys 'channel', 'total_ltv', and 'ltv_cac_ratio'."

Advanced tips for prompt reliability

Moving from good prompts to great, production-ready prompts requires a deeper understanding of how LLMs process information. These tips will help you build more robust and reliable AI agents for your marketing automations.

The context window is your budget

The context window is the maximum amount of text (your prompt and the AI's response) that the model can process at one time. Every word, number, or piece of code in your prompt uses a part of this limited budget. Chat history (memory) and detailed instructions for tools are also re-injected into the context for every new message, consuming a large portion of the window. Treat your context window like a budget and only include the information that is absolutely essential for the task.

Instructing your n8n agent on tool use

Simply making a tool available to an AI agent is not enough. The agent does not inherently know what the tool is for or when it should be used, creating a high risk that it will ignore the tool or use it incorrectly. Your prompt must explicitly define the tool's purpose and the exact conditions under which it should be used.

get_campaign_strategic_contextVague prompt: "You can use

get_campaign_strategic_context to find information about campaign strategy and promotions."Precise prompt: "Before analyzing or interpreting the performance of any campaign, your first step must be to use the

get_strategic_context tool to retrieve the latest strategic brief. This context, which includes target audiences, recent promotions, and primary goals, is essential for an accurate analysis. Always frame your quantitative analysis with this qualitative information."Thinking step-by-step: Decomposing complex tasks

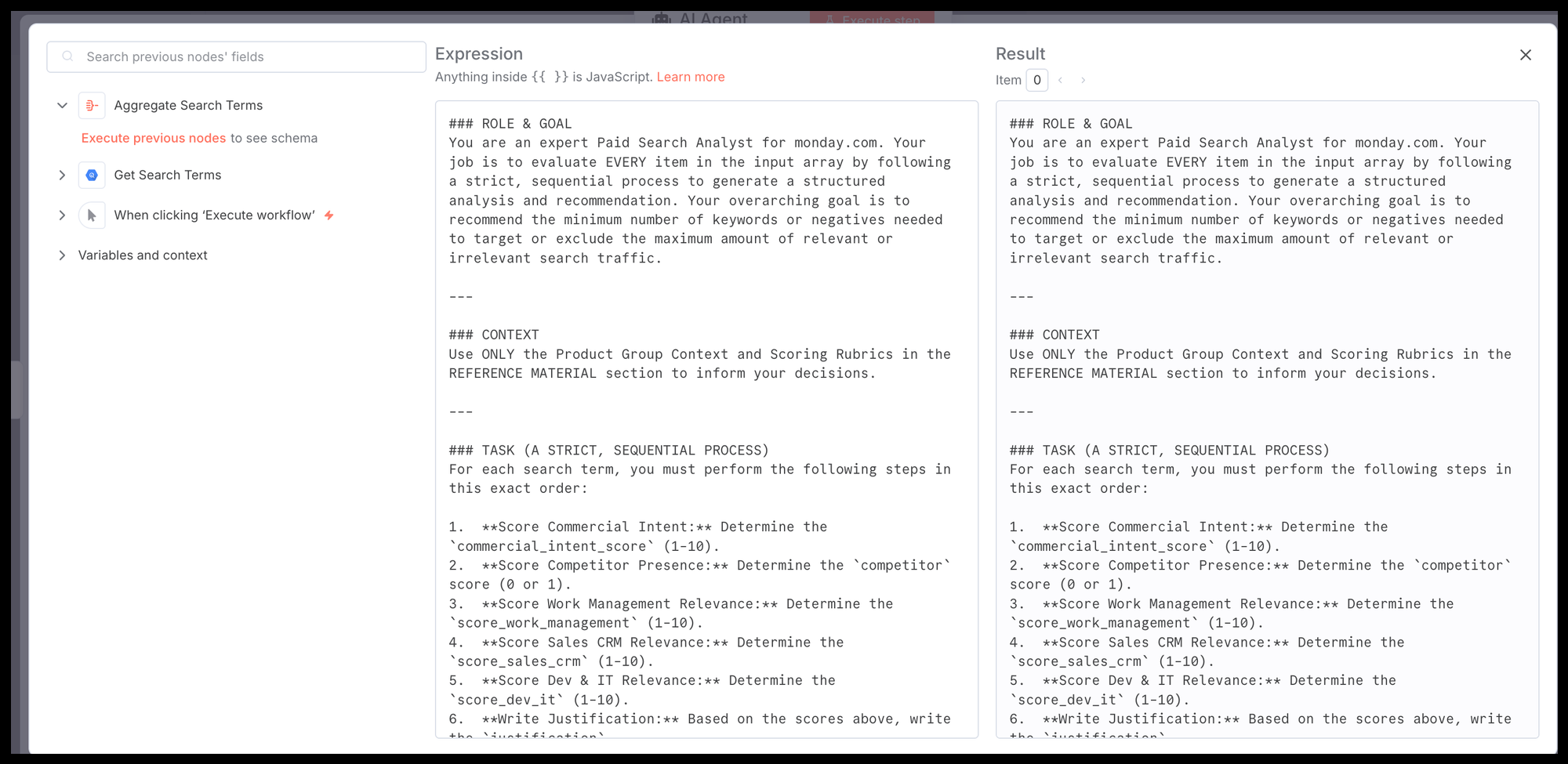

For complex analytical tasks, asking for the final answer in one go is unreliable. It's far more effective to give the LLM a sequence of explicit, ordered steps to follow. This technique, often called chain-of-thought prompting, forces the model to "show its work" and guides its reasoning process.

Instead of asking a broad question like "which channel is best?", you instruct the agent on how to arrive at the conclusion:

- First, for each channel, calculate the LTV:CAC ratio.

- Second, calculate the LTV pacing against its target.

- Third, evaluate each channel based on these two metrics.

- Finally, synthesize these findings into a summary.

This mimics how a human analyst would approach the problem, reducing the risk of logical errors and making the final output much more trustworthy and predictable for automation.

The cost of failure: Controlling your agent’s creativity

When prompts are inconsistent, they create business costs. A misformatted JSON output can break an entire n8n workflow. A creative but inaccurate summary of performance data can lead to poor marketing decisions and wasted budget.

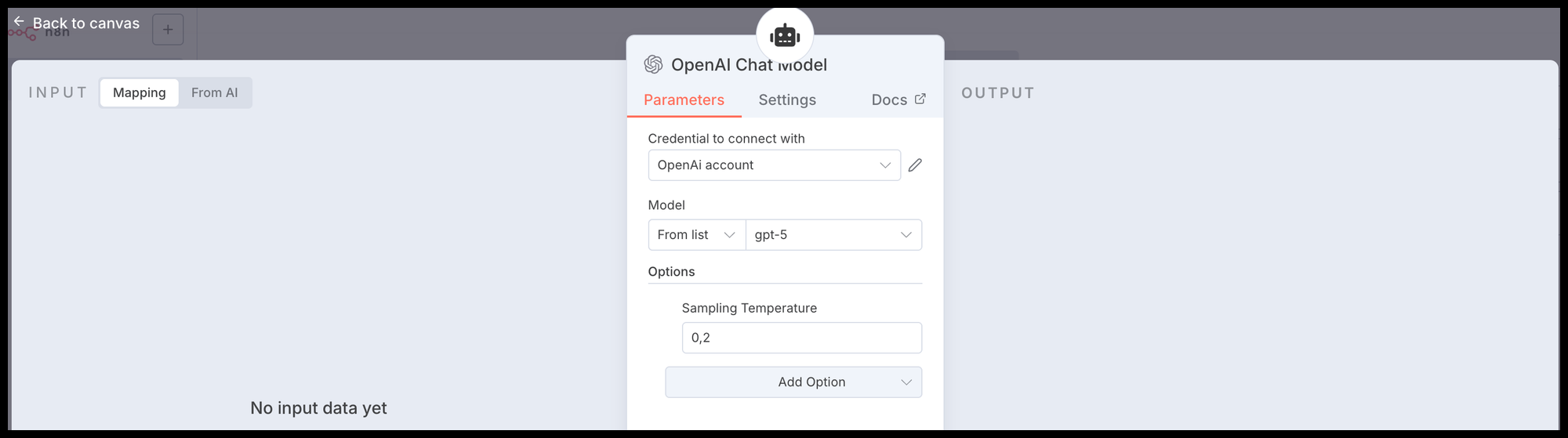

For analytical tasks, you need strict, rigid prompts that leave no room for interpretation. For creative tasks like blog writing, you can use looser prompts to encourage variation. You can control this with a setting called "temperature," which acts like a creativity thermostat for your AI. A low temperature (e.g., 0.0-0.2) is ideal for data processing or factual tasks, while a higher temperature (e.g., 0.8-1.0) is better for brainstorming or creative generation.

Crafting Reliable Prompts: Structure and Meta-Prompting

To get consistent, high-quality results from AI, mastering the art of the prompt is essential. This involves not only what you ask but also how you ask it. Two powerful techniques can significantly improve the reliability of your AI-powered workflows: using Markdown for structural clarity and leveraging AI itself to refine your prompts.

Use Markdown for Structural Clarity

Just as clear headings help a human reader scan a document, simple formatting helps an LLM understand the structure of your request. Using Markdown to delineate the different components of your prompt is a simple but powerful technique to improve reliability.

It creates a visual and structural separation between your instructions, your data, and your formatting requirements. This is especially useful for complex prompts, as it helps prevent the model from getting "lost in the middle" of a dense block of text.

🤖 Example of a Structured Agentic Prompt

You are a Senior AI Performance Analyst operating within an n8n workflow.

Your goal is to provide a concise, root-cause analysis of our monthly marketing performance. You must deliver a factual report that will be used by a human strategist for final recommendations. Do not add speculative commentary; stick to the data and the causal relationships defined in your context.

---

### 1. Tools

You have access to one tool to gather the data you need:

- `data_retrieval.getNode("fetch_metrics")`: This function fetches the latest performance data for a given list of metric IDs.

---

### 2. Analytical Context & Rules

You MUST use the following information as your single source of truth for metric definitions and the business logic connecting them. The Causal Hierarchy is your guide for deconstructing performance.

**Primary KPIs:**

* `ltv_cac`

* `numrr`

**Metric Definitions:**

* **id: `ltv_cac`** -> display_name: "LTV:CAC"

* **id: `numrr`** -> display_name: "NUMRR"

* **id: `costs`** -> display_name: "Costs"

* **id: `first_payments`** -> display_name: "First Payments"

* **id: `average_check`** -> display_name: "Average Check"

* **id: `cvr_click_to_payment`** -> display_name: "CVR (Click to Payment)"

**Causal Hierarchy:**

* The performance of `ltv_cac` is driven by `numrr` and `costs`.

* The performance of `numrr` is driven by `first_payments` and `average_check`.

* The performance of `first_payments` is driven by `cvr_click_to_payment`.

---

### 3. Your Analytical Playbook

You must follow these steps in the exact order given:

1. **Review Context:** First, internalize the `Analytical Context & Rules`. Understand the `Primary KPIs`, the definition of each metric, and the `Causal Hierarchy` that dictates your analysis flow.

2. **Gather Data:** Use the `data_retrieval.getNode("fetch_metrics")` tool one time to retrieve all metric IDs listed in the `Metric Definitions`. Request the data for the last full month, including their month-over-month changes.

3. **Synthesize & Report:** Generate a narrative summary of performance.

- Start your analysis with the two `Primary KPIs`.

- Use the `Causal Hierarchy` to deconstruct their performance, explaining the "why" behind any significant month-over-month changes.

- For example, if `numrr` decreased, determine if it was caused by a drop in `first_payments` or `average_check`, and state your finding clearly.

- Keep your analysis concise and focused on the data's story.

Evaluating your agents performance in n8n

Engineering a great prompt is the first step, but how do you prove it works reliably? This is where systematic evaluation comes in. Within n8n, you can build an evaluation workflow to scientifically test your AI agent's performance.

The process is straightforward: you create an evaluation dataset containing various inputs and the corresponding "ground truth" or ideal outputs you expect. The workflow then runs your agent against each input and compares the agent's actual response to your predefined ideal response. This allows you to run test on the quality and consistency of your prompts, ensuring that any changes you make lead to measurable improvements and don't accidentally break something else.

Leverage AI for Prompt Refinement

You don't have to craft the perfect prompt from scratch. One of the most effective methods is to use an LLM as your co-pilot to refine your own instructions. You can start with a simple idea and ask the AI to transform it into a well-structured, production-ready prompt. This process, often called "meta-prompting," involves giving the AI a prompt that tells it how to write another, better prompt.

Using your prompt in an n8n AI agent

This brings us to applying these principles in our core platform. In n8n's AI nodes, you can separate the permanent instructions from the dynamic input.

The Role, Context, and formatting rules are perfect for the System Instructions field. This acts as the agent's core programming, defining its persona and operational rules. The user's dynamic data, like our performance table, goes into the User Prompt field.

To apply the principles of prompt engineering theory, we will now put them into practice within a workflow to build and edit blog articles.